Many photographers found it easy to scoff at generative AI when it was being produced by programs with names like DALL-E and Midjourney. But now Photoshop, the household name in photo editing that even non-photographers use as a verb, has not only joined the game but has already had a hand in more than 900 million AI-generated images. The latest addition to Photoshop's AI tools in the beta version is Generative Expand, a tool that aims to ease photographers' aspect ratio woes by filling in the edges to expand an image. But the more I look into Photoshop's new generative AI, the more I realize that Adobe is at least considering the ethical ramifications of such technology.

To answer the question of whether using AI in an image is ethical, I believe photographers need to look at three things: how the AI is being used, how the resulting image is labeled, and how the training images were sourced. I know many photographers are disheartened to see the leading photo editor leap into the generative AI that has many artists and graphic designers worried about their future. Still, Adobe, at least on the surface, appears to be pondering those three vital questions.

How Photoshop generative AI is used

Currently, the beta version of Photoshop allows editors to generate objects to add to an image, remove existing objects from an image, and fill in the edges of an image. The ethical ramifications of this technology sit on a sliding scale, with un-cropping a photo at the low end and creating a fake image to fool the internet at the high end. At first glance, that's concerning for several reasons, including the doctored images already rampant on fake news sites and the number of artists mislabeling graphic art as photographs.

However, the tech-savvy could already add objects that didn't exist to an image, remove objects that did exist, and fill in the edges of a photograph. In fact, the Photoshop-savvy have been doing those things for decades. The only thing that has changed is the amount of time and expertise required to do them. Can Adobe Firefly be used maliciously? Absolutely. But so can plain old Photoshop, as evidenced by the number of faked images floating around the web before generative AI existed.

The biggest remaining concern, then, is that the average person can now easily add and remove objects from a photograph, whether to create a fantasy illustration or to maliciously spread fake news. That's where labels come in.

How Photoshop AI is labeled

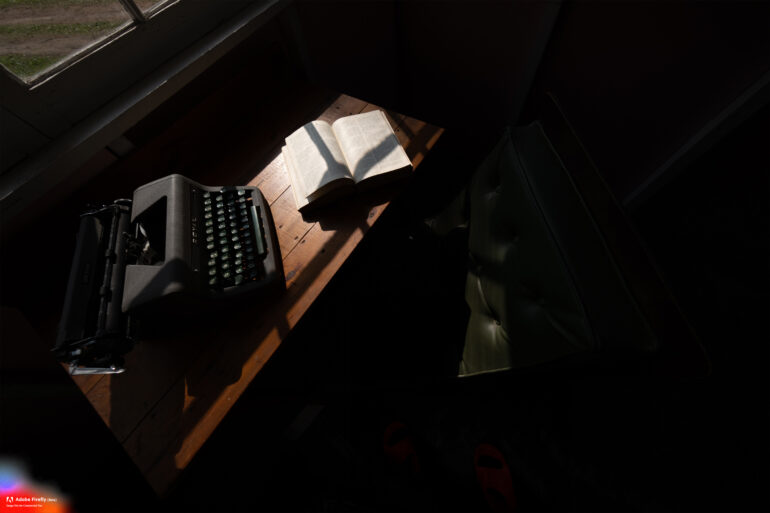

I went over to the web version of Firefly and tried adding objects to my images. First, I tried adding a moose to a Northern Lights photo. I also used the web version of Firefly to remove an object. (For the record, the moose wasn't added very well; his sharp edges didn't match the blurred rocks where he was placed.) All three images downloaded with a brightly colored Adobe Firefly label in the corner, clearly designating each image as AI-based and not for commercial use.

Could I open that same photo in the full version of Photoshop and remove the label? Absolutely. It took less than 10 seconds to simply crop that well-intentioned label out of the image, and even free photo editors can crop an image just as easily. The label may give pause to those who haven't thought about the ethical ramifications, but it won't do anything beyond wasting 10 seconds of a malicious faker's time.

The AI label is of a different sort, however, for those who download the full beta version of Photoshop to a hard drive. These images are labeled with Content Credentials inside the image's metadata. Rather than a glaringly obvious logo in the corner, this information is only available to viewers who dig into the metadata, using either a program like Photoshop or a free website.

One of the many reasons fake news spreads so quickly is that there's often little time between seeing an image and tapping the share button. While Content Credentials will be a great tool for journalists, photo editors, photo contest judges and the like, the general public likely won't use them (if they even know they exist). Content Credentials are a good first step, but I don't see them making a drastic impact.

How Photoshop AI is trained

Many generative AI programs are trained on images scraped from across the web, with little regard for copyright. While the law is murky on using copyrighted photographs for software training, photographers and other artists see a well-defined line between right and wrong. When it comes to using copyrighted images without permission, the answer is a resounding no.

Adobe says that the images used to train Firefly come largely from Adobe Stock, whose licensing agreement lists AI training as a permitted use. Adobe also lists "openly licensed content and public domain content, where copyright has expired" as sources used to train its AI programs. That illustrates the importance of fully reading and understanding a licensing agreement. But it also shows that Adobe is more ethically selective when it comes to training data.

The big question remains: is Adobe Firefly, the generative AI behind Photoshop's new tools, ethical? The answer, sadly, is that it depends on who is using it. But that's also the answer to the ethics of plain old non-AI Photoshop.

Can Adobe Firefly and Photoshop's generative AI be misused? Yes, quite easily. But the tech-savvy were already capable of using Photoshop to fake images. The only thing that has changed is that it's a little easier and faster to do so.

Still, I think Adobe has more ethical boundaries in place than most other platforms. Images are labeled, though the labels are far from foolproof, and training images are sourced ethically. Can Adobe improve before bringing Firefly out of beta? Absolutely. An Adobe executive's response to concerns over fake images was that "we're already in that world." That response, to me, feels a bit glib.

Sadly, the original generative AI platforms set the ethical bar very low, and surpassing those expectations wasn't terribly difficult, at least not for a company whose very income comes from artists concerned about how AI affects their job security. Adobe Firefly has more ethical safeguards in place for its generative AI, though with the bar set so low, those safeguards are far from foolproof.

While Firefly images are labeled and generated by a model trained on properly licensed images, ultimately it is up to both the artist and the viewer to use the tools responsibly: the artist, to use proper labels and not attempt to pass off AI generations as real photographs; and the viewer, to at least question an image's authenticity before hitting the share button.