“The idea is you’re looking deep into the heavens,” says Professor Aaron Roodman of the Dept. of Particle Physics & Astrophysics at SLAC about the aim of the Rubin Observatory project. It began over two decades ago as an idea and is now nearing completion with the collective efforts of multiple teams and countries. I had a very fruitful interview with Professor Roodman the other day. He detailed the story behind the project and the benefits for astronomy that it intends to provide. During the course of the project, his team was responsible for the central optics system behind the telescope. That effort culminated in the Rubin Observatory project being awarded the Guinness Record for the highest resolution sensor ever created for a camera. It’s also the largest digital camera ever built, housing the largest lens ever made.

On a personal note, this interview was fascinating to be a part of. I got to spend an hour interviewing one of the brightest minds behind the Rubin Observatory project. I have been hugely interested in astrophysics since childhood (and also probably the biggest X-Files fan in the region). Listening to Professor Roodman explain technical details of the optical portions of the telescopes had me starry-eyed (pun unintended). This camera will likely stake claim to sensor resolution and size bragging rights for many years to come.

The scope of the Rubin Observatory project is pretty well defined, but the potential is seemingly limitless. It could end up photographing celestial objects and occurrences that may never have been documented before. Even more interesting is that good portions of these images and data will be available to the public for viewing and analysis. I wish I lived closer to California to be able to take a tour of the SLAC facility. For now, though, I will be eagerly looking forward to the day that the first images come from the Rubin Observatory telescope in 2023.

Dept. of Particle Physics & Astrophysics

Kavli Institute for Particle Astrophysics & Cosmology

SLAC National Accelerator Laboratory

The Phoblographer: Hi Aaron. For the benefit of our readers, please tell us what the Stanford Linear Accelerator Center’s involvement in the Rubin Observatory project (previously known as the Large Synoptic Survey Telescope – LSST) has been to date.

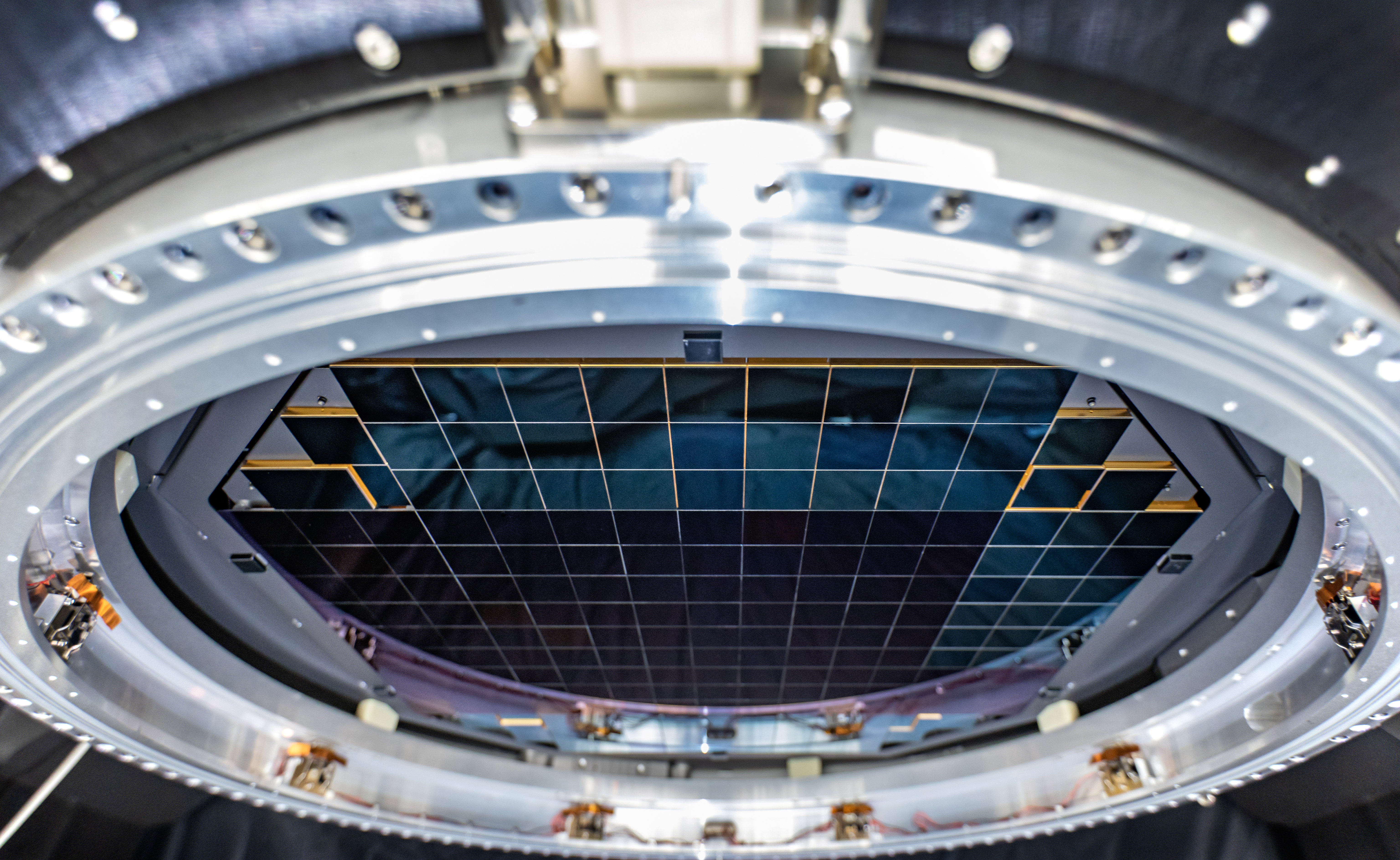

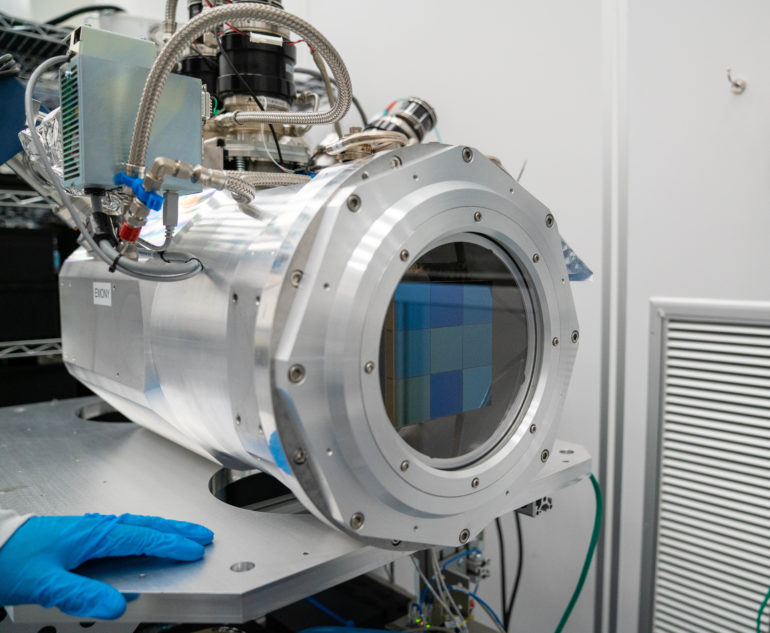

Aaron Roodman: We call it SLAC for short. SLAC is a national laboratory here in the US, funded by the Department of Energy. Our involvement in this project dates back to 2003, well before this project was funded. Through that time, we’ve been responsible for the camera. There’s another group that’s responsible for the telescope; well, we’re all one team, but kind of subgroups. So our team at SLAC is responsible for the camera. Some people at SLAC have been working on it since 2003. This is typical jargon for telescopes – the telescope often refers to the set of mirrors, and then there’s an instrument which may have its own optics and has whatever measurement apparatus you want. In our case at the Rubin Observatory, we only have one instrument – we call it the camera. It has three giant lenses and a 3.2 gigapixel focal plane.

The project was named after Vera Rubin, who was a very distinguished astronomer. She deserves a lot of the credit for discovering what is seen as some of the best evidence for dark matter in the universe. A very distinguished astronomer, she passed away a few years ago. There was a process; LSST, we decided it wasn’t the greatest name. Getting named for a prominent female American astronomer is a great way to go.

The Phoblographer: If I’m not mistaken, the work on the camera for the telescope began in 2007 when digital photography was still pretty much in its infancy in the professional sector. How did the team arrive at choosing a 3200 megapixel sensor for the camera?

Aaron Roodman: Well, we still have a bit to go. But we’re getting close. Our camera is scheduled to be finished at Stanford next year, next summer. We’ll ship it to the telescope in Chile. It’ll take some time, and it’ll take some time to get it on the telescope and working. Right now, the assembly is finished, ready to go out. We have a 10-year survey planned that would start December 2023. An all-night, seven-day-a-week survey. We want, in that survey, to take images of every part of the available southern hemisphere sky, such that in three or four nights, we’ll have at least one image everywhere. Over 10 years, we’ll have over 850 images in every direction in the southern hemisphere. So that’s the starting point. Those numbers are driven by the science we want to do: studying dark energy, dark matter, objects of the solar system, stars of the Milky Way, etc. That defines what we want to do. To do that, we need to have a big field of view. Telescopes have a field of view just like a camera. So we decided, to do that survey, we needed a field of view of about 10 square degrees.
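As a rough check on those survey numbers, the short Python sketch below works out the average gap between visits to the same patch of sky and how many 10 square degree pointings it takes to tile one hemisphere. It assumes every calendar night is usable and that a full hemisphere is covered with no overlap between pointings; neither assumption comes from the interview, so treat the output as an order-of-magnitude estimate only.

```python
import math

SURVEY_YEARS = 10               # "a 10-year survey"
VISITS_PER_FIELD = 850          # "over 850 images in every direction"
FIELD_OF_VIEW_SQ_DEG = 10.0     # "about 10 square degrees"

# Assumption: every calendar night is usable (no weather or maintenance losses).
nights = SURVEY_YEARS * 365.25
nights_between_visits = nights / VISITS_PER_FIELD

# Area of the whole sky in square degrees: 4*pi steradians times (180/pi)^2.
full_sky_sq_deg = 4 * math.pi * (180 / math.pi) ** 2
hemisphere_sq_deg = full_sky_sq_deg / 2

# Assumption: pointings tile the hemisphere perfectly, with no overlap.
pointings_per_pass = hemisphere_sq_deg / FIELD_OF_VIEW_SQ_DEG

print(f"{nights_between_visits:.1f} nights between visits to the same field")
print(f"{pointings_per_pass:.0f} pointings to tile one hemisphere once")
```

The average gap comes out at a little over four nights, which is in line with the "three or four nights" quoted for a single pass over the sky.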

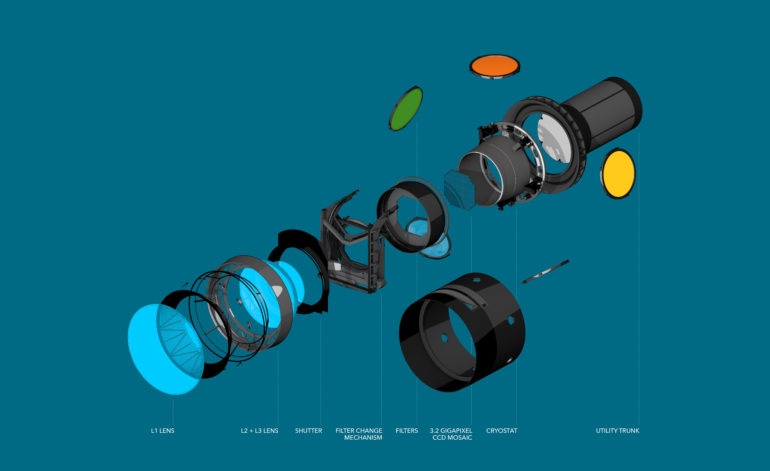

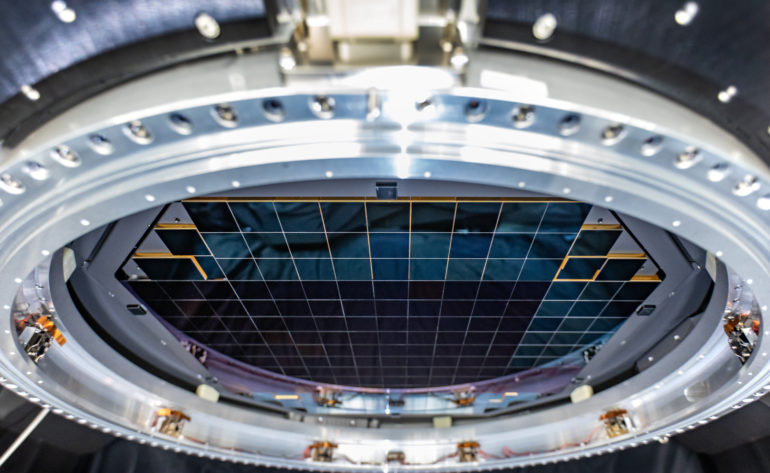

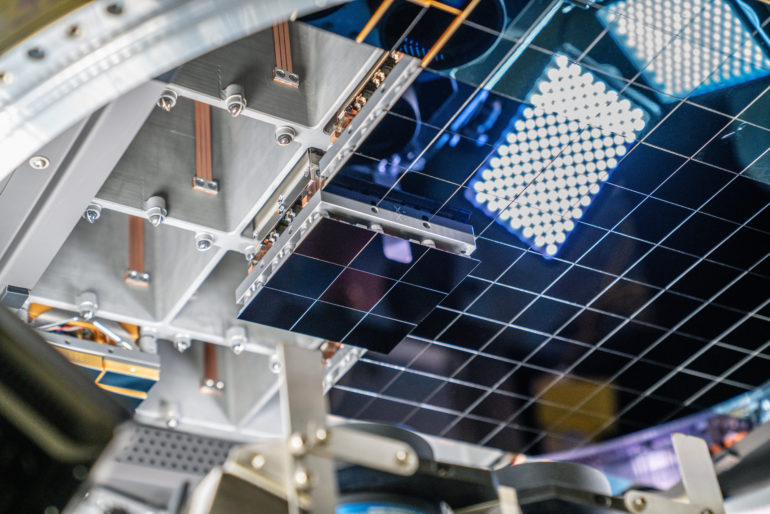

There are more technical details – you want to figure out what is the focal length of the telescope, what is the f-number of the telescope. Our f-number is 1.2, so we’re a very fast optical system. You also have to figure out what’s the size of the primary mirror. That defines how much light you collect. You need to know that with the 10 square degrees to figure out how well the mirror curvature is going to go. We figured we could use an 8.4m diameter primary mirror. That’s the biggest mirror that the place where we were going to have the mirrors built has made. They made a bunch of 8.4m diameter mirrors that fit. There’s a tunnel, a kind of a bridge at the summit in Chile, that’s 8.4 metres plus a couple of inches so you can drive through the tunnel without getting a helicopter. We figured out how big a telescope, field of view, focal length, f-number, and once you have those, that tells you how big the focal plane has to be. The other thing you need to know is the point spread function. It’s the imaging disc. How big does a star appear? How good are the images in terms of the atmospheric turbulence affecting the images? We figured we could get to a seeing disc of 0.7 arc seconds. That means that you want your pixels to be like a third of that number or so. So you oversample. We chose 0.2 arc-second pixels in 10-micron pixels. So once you know the pixel size, the size of the focal plane, divide basically, and you get 3.2 gigapixels.
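The "divide, basically" step can be written out. The sketch below uses only the figures quoted above (10 square degrees, 0.2 arc-second pixels, 10-micron pixels, an 8.4 m primary, 0.7 arc-second seeing) plus the standard small-angle relation between pixel size, pixel angle, and focal length. It is a back-of-the-envelope check, not the team's actual design calculation.

```python
import math

ARCSEC_PER_RADIAN = 180 * 3600 / math.pi   # about 206,265

field_of_view_sq_deg = 10.0     # quoted field of view
pixel_scale_arcsec = 0.2        # quoted angular size of one pixel
pixel_size_m = 10e-6            # quoted physical pixel size (10 microns)
primary_diameter_m = 8.4        # quoted primary mirror diameter
seeing_arcsec = 0.7             # quoted size of the seeing disc

# Number of pixels = sky area / area of sky covered by one pixel.
field_sq_arcsec = field_of_view_sq_deg * 3600 ** 2
n_pixels = field_sq_arcsec / pixel_scale_arcsec ** 2

# Small-angle relation: pixel size = focal length * pixel angle (in radians).
focal_length_m = pixel_size_m * ARCSEC_PER_RADIAN / pixel_scale_arcsec
f_number = focal_length_m / primary_diameter_m

# Oversampling: how many pixels span one seeing disc.
pixels_per_seeing_disc = seeing_arcsec / pixel_scale_arcsec

print(f"{n_pixels / 1e9:.2f} gigapixels")
print(f"focal length {focal_length_m:.1f} m, f/{f_number:.2f}")
print(f"{pixels_per_seeing_disc:.1f} pixels across the seeing disc")
```

The numbers hang together: roughly 3.2 gigapixels, a focal length a little over 10 m, and an f-number of about 1.2, matching the figures given in the answer.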

Sensors for astronomy are different from sensors for photography. Near-infrared light is very important to us because we’re looking at distant galaxies that are red-shifted. A lot of the interesting light is being pushed into the near-infrared band. We’re using a CCD technology called Back Illuminated Deep Depletion. So I’ve noticed that in the camera world, Back-Illuminated has shown up. In astronomy, that’s been the standard for a little while. In CCDs, the circuitry for the pixel charge transfer, or in CMOS devices, the pixel amplifier, sits on what’s always called the front side. If that is where the light hits, some of the light gets absorbed by that patterning on the chip. If you’re back-illuminated, then there’s no light absorption there. That’s why it’s very interesting for astronomy, where every photon counts.

Our devices are also designed to have very high quantum efficiency. We get to 90 or even 95%; we get to the point where we don’t lose many photons on our sensors. Now the Deep Depletion, which is not something that SLRs need, is for near-infrared light, wavelengths of about 1000 nanometres, which you can see with silicon if the silicon is thick enough. Old CCDs, or the CCDs or CMOS sensors used in regular cameras, they’re thin devices – usually 10 or 20 microns. You don’t see the near-infrared light; it goes right through the silicon. We want it, so they had to be made thicker. There’s a special processing, made out of different materials to make them thick devices. Our readout is 18 bits per pixel. If it’s packed into 18 bits, then it’s a little bit over 4 GB per image. But we usually do write it out into 32-bit integers, so then it climbs up to about 6.4 GB. It’s a lot. We estimate that when we’re observing, we’re going to collect between 10 and 15 TB of data every night. We’re closing in on exabytes. Hundreds of petabytes. That’s raw data; there will be processed data too that will be very large.

The Phoblographer: What sort of resolving power are we talking about here? One article I read said that the sensor’s “resolution is so high that you could spot a golf ball from 15 miles away.” Also, why CCD over CMOS for the sensor?

Aaron Roodman: The way we talk about resolution, we’re limited by the distortions of the atmosphere. The resolution will be set by the atmosphere. The typical number is 0.7 or 0.75 arc seconds. Optical astronomy has not moved to CMOS sensors as yet. Commercial CMOS sensors do not meet the needs of astronomical cameras. There are some astronomical grade CMOS sensors that are quite expensive. In an application like ours, we don’t have to read out super, super-fast (we read out fast; we read out the entire focal plane in 2 seconds). It’s very fast for a CCD device; we don’t need faster than that. CMOS sensors have other issues that we think for this kind of wide-field imaging would be quite difficult to deal with. CMOS sensors have an amplifier or a little set of transistors or FETs for every pixel. That means that potentially the electronic gain and the interpixel capacitance would be different for every pixel. In principle, at least, you might have to calibrate every pixel. That looks extremely difficult at the level that we want to do. CCD has the feature that there are a limited number of amplifiers. In our case, we have highly multiplexed CCDs. We’ve got 16 amplifiers, so 16 sections on each CCD, which is a lot. No one has done 16 before. Typically it’s one or two, and there are some devices now with four. I think that’s the reason why the field has not moved to CMOS sensors. Although the Nancy Grace Roman Telescope is planning to use CMOS.

The Phoblographer: What have been some of the most challenging portions of the project?

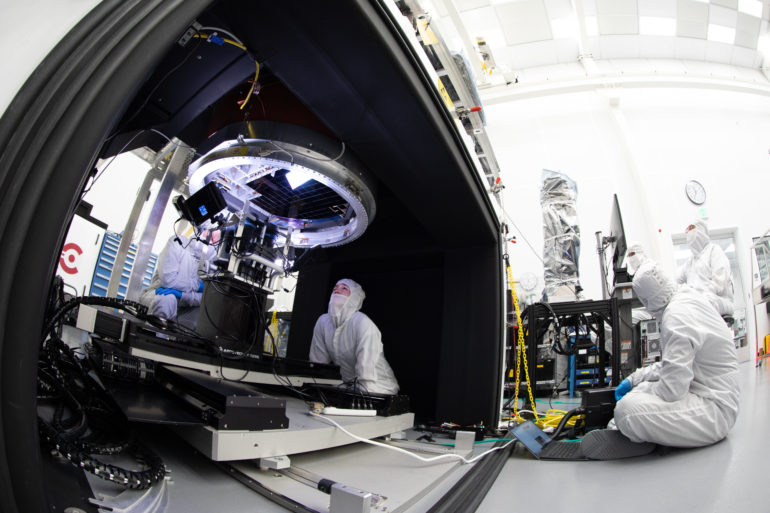

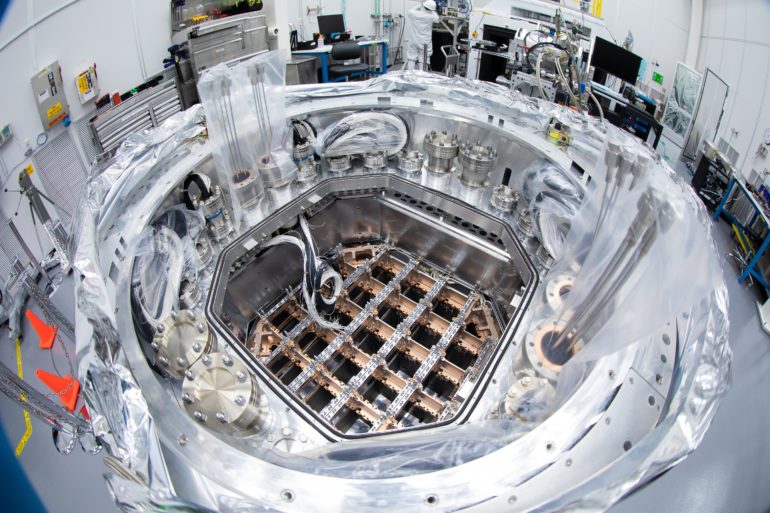

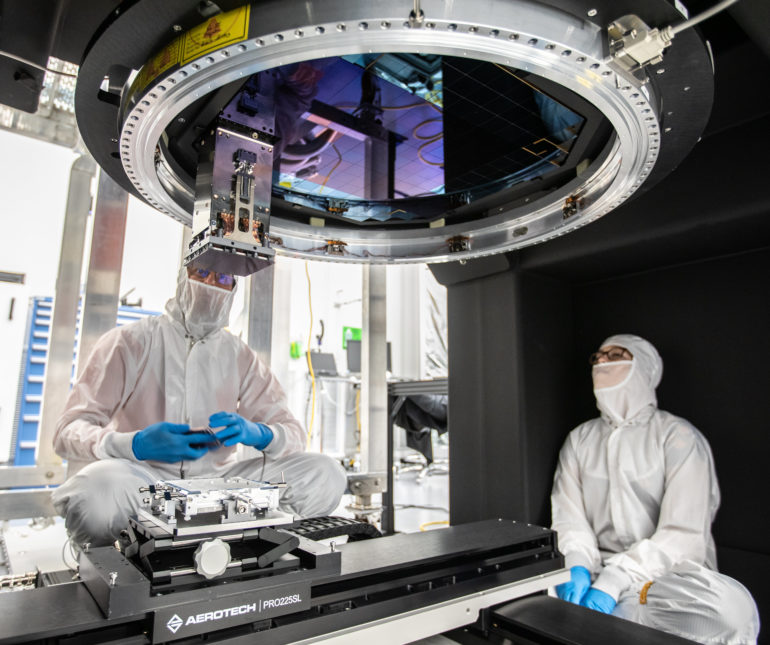

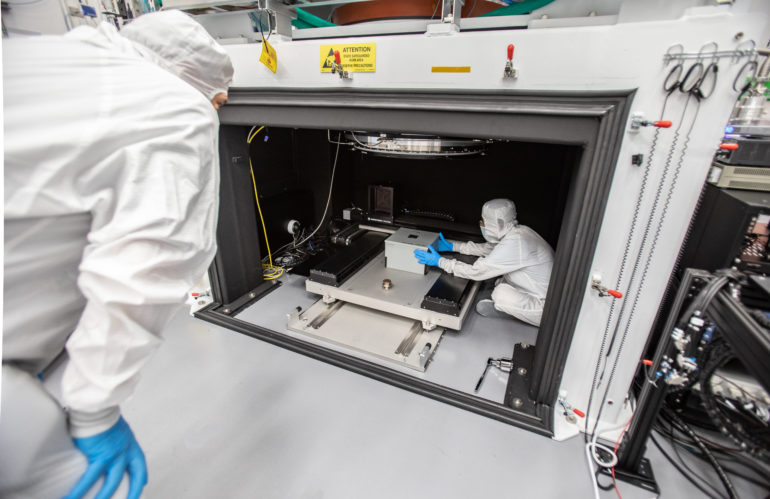

Aaron Roodman: This has been a very difficult project. Building the CCDs has been a challenge. Building the giant lenses was a challenge. One of the interesting challenges is that the amount of space that we have between CCDs is extremely small. Our focal plane is made up of 189 plus 12, so 201 CCDs. It’s about 64 cm by 64 cm. That space is precious. When we designed it, the amount of space between adjacent CCDs was minimized. Less than a millimetre between them. CCDs are very delicate; you bump them, you’d break them. Assembling the focal plane such that the CCDs were very close together was extremely challenging. The CCDs are part of a subunit of 9 CCDs as a combined structure. It’s in a tower about 60 cm long and 12 x 12 cm across. That unit is a combined thermal, mechanical, electrical unit. It’s got the readouts, the thermal connections to cool the CCDs to minus 100 °C. The mechanical connection, our flatness is extraordinary. Our focal plane is flat to 4 microns. That’s measured. Installing this 60 cm unit that you can only grab from the back such that the CCDs don’t hit – extremely difficult. Years of effort to make that happen.
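Those focal-plane figures can be cross-checked against each other. The sketch below assumes the 3.2 gigapixels are spread evenly over the 189 main CCDs, that each sensor is square, and that the 9 CCDs in a subunit sit in a 3 x 3 grid; none of these three assumptions is stated in the interview, so the result is an estimate rather than a specification.

```python
import math

science_ccds = 189          # quoted; the other 12 sensors are left out here
ccds_per_unit = 9           # "a subunit of 9 CCDs"
total_pixels = 3.2e9        # quoted focal-plane pixel count
pixel_size_um = 10          # quoted pixel size in microns

# Number of 9-CCD units needed to hold the 189 main sensors.
units = science_ccds // ccds_per_unit

# Assumption: pixels are shared evenly and each sensor is square.
pixels_per_ccd = total_pixels / science_ccds
ccd_side_pixels = math.sqrt(pixels_per_ccd)
ccd_side_mm = ccd_side_pixels * pixel_size_um / 1000

# Assumption: 3 x 3 layout per unit, ignoring the sub-millimetre gaps.
unit_side_cm = 3 * ccd_side_mm / 10

print(f"{units} units of {ccds_per_unit} CCDs")
print(f"about {ccd_side_pixels:.0f} pixels ({ccd_side_mm:.0f} mm) per CCD side")
print(f"about {unit_side_cm:.1f} cm per unit side")
```

Under those assumptions each sensor is roughly 4,000 pixels (about 4 cm) on a side, and a 3 x 3 group comes out a little over 12 cm wide, consistent with the 12 x 12 cm footprint described above.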

The Phoblographer: Cooling systems for operating such a large sensor must have been designed from scratch. Tell us a bit about these.

Aaron Roodman: The camera actually has a complicated, custom cooling system. We need to cool the CCDs to minus 100 °C; that’s their operating temperature. Our electronics to read out the CCD – usually called the controller – is in the vessel. You need some electronics to move the charge from each pixel over to where it’s read out. That electronics is in the vacuum chamber (or cryostat) with the CCDs. That’s an innovative step; we did that because we didn’t want to bring 3000 analog signals outside. That needs to be cooled too. So we have two thermal zones; one at about minus 30 to minus 35 °C, the other minus 100 °C. Both those systems were custom made.
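The "3000 analog signals" figure follows from numbers given earlier in the interview: 189 main CCDs with 16 amplifiers each. The sketch below also estimates how fast each amplifier has to run to empty the focal plane in the quoted 2 seconds. It assumes the 3.2 gigapixels are shared evenly across those amplifiers and ignores the 12 extra sensors, so the per-channel rate is approximate.

```python
science_ccds = 189           # quoted number of main CCDs
amplifiers_per_ccd = 16      # "16 sections on each CCD"
total_pixels = 3.2e9         # quoted focal-plane pixel count
readout_seconds = 2.0        # "the entire focal plane in 2 seconds"

# One analog signal per amplifier.
channels = science_ccds * amplifiers_per_ccd

# Assumption: pixels are shared evenly across all channels.
pixels_per_channel = total_pixels / channels
pixel_rate_hz = pixels_per_channel / readout_seconds

print(f"{channels} analog channels")
print(f"about {pixels_per_channel / 1e6:.2f} million pixels per channel")
print(f"about {pixel_rate_hz / 1e3:.0f} thousand pixels per second per channel")
```

Splitting the readout this many ways is what lets each individual amplifier run at a modest rate of roughly half a million pixels per second while the whole focal plane still reads out in 2 seconds.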

The telescope itself – the mirror is temperature-controlled. It’s very important in a telescope like this to control the temperature of the mirror to keep it within 1°C of the air temperature. The mirror has its own thermal system with cooling fluids that run in tubes underneath the mirror. The substrate is thick, but lots of it is carved out. In the end, the actual material is thin, so you can cool it pretty rapidly. It has to be very dynamic because, in the course of an evening, the temperature drops a lot.

The Phoblographer: A sensor as big as this would no doubt need massive lenses to go with it. What sizes are we talking about here and what focal lengths does it have?

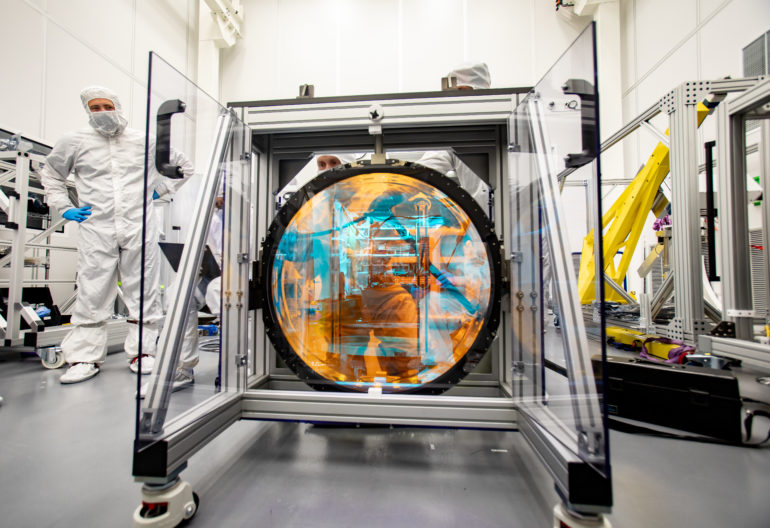

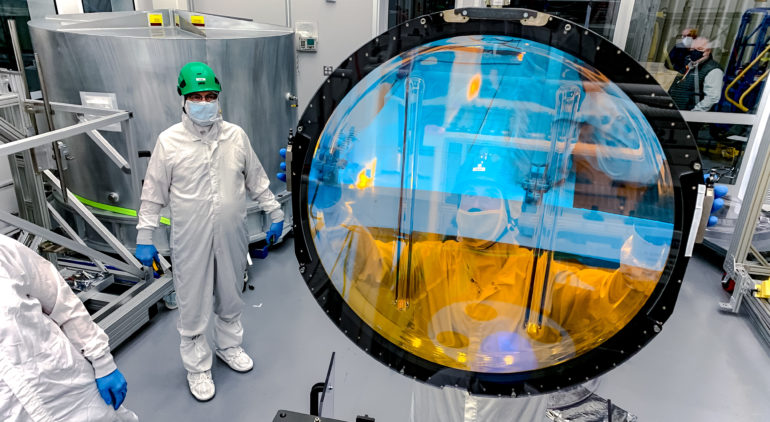

Aaron Roodman: The camera has 3 lenses, and the big lens is the one that’s the world’s largest lens for astronomy. It’s in the Guinness Book of World Records now. The camera got in… we said it was the biggest camera, but Guinness decided it had the highest resolution. They counted resolution as the number of pixels. That’s not how an astronomer would term resolution. The lens is 1.55 metres in diameter. It is massive. The other two lenses are very large too. They needed to be so big because we have a big focal plane. To get all the light to the focal plane, you’ve got light coming in from different paths; you have to have a giant lens. It’s one focal length. An important thing to note is that you could not use the camera without the mirrors. They’re meant to be used together. The only way you’re getting good images is by using them together. The whole system has three mirrors and three lenses. This is the first large three-mirror telescope ever built. When you talk about the focal length, it’s really the focal length of the whole system together – the mirrors and the lenses.

Photo: Farrin Abbott / SLAC National Accelerator Laboratory

Professional telescopes have either one or two mirrors. Often they have another mirror that’s used to move light around. But in terms of collecting light, generally, it’s one or two mirrors. The Hubble Space Telescope, the giant Keck telescopes – two mirrors. There are some that operate with one mirror, but the lenses do the job of a second mirror. We have three mirrors, not just to collect the light; it’s because we have this 10 square degree field of view. In cameras, one of the things that people pay attention to is how good is the distortion in the corner of the image. It’s easy to be good in the centre of the images, but is there astigmatism or coma in the corner of the images? That’s something photographers care about. Good, sharp imaging lenses will deliver good corner sharpness, and we care about that a lot too. To get that over a 10 square degree field of view, we went to a design with three mirrors, and the third mirror helps enormously in correcting astigmatism in the corners.

(Jacqueline Ramseyer Orrell/SLAC National Accelerator Laboratory)

The Phoblographer: What sort of checks and corrections have to be done to ensure optical flaws and aberrations can be removed on these lenses?

Aaron Roodman: In the design, the third mirror was a big part of the system. The mirrors and the lenses have been very thoroughly tested. We also have a system called Active Optics. We can adjust the focus by moving the whole camera or by moving the secondary mirror. Those are both on hexapods, so we can move both of those and focus. We can move them sideways, or we can tilt them.

We can also change the shape of the mirrors a little bit. Enough to correct for any sag, any motion of the camera with respect to the mirrors, and we can correct for a certain level of misalignment or mis-figuring. We’ll change those dynamically to get the best image. It is definitely real-time calibration. We have some special CCDs devoted to this. They’re placed out of focus, and by looking at out of focus stars, you get a lot of information about the aberrations and so you can correct for it as you go.

(Jacqueline Orrell/SLAC National Accelerator Laboratory)

The Phoblographer: What sort of images is the telescope aiming to capture in the near future, and what will the images be used for?

Aaron Roodman: What’s special about the Rubin Observatory is that we’re going to have – well, the jargon in astronomy is Deep images. Deep images are where you can see the very faintest stars and galaxies. The idea is you’re looking deep into the heavens. We will take extremely deep images. We will see extremely dim stars and galaxies. There are images existing which are just as deep, but they are over tiny parts of the sky. What’s special about our project is that we will have images that deep, over the entire southern hemisphere sky. No one has done that before. In addition, because we’ll see every part of the sky many, many times – 850 times over 10 years – and our images are very deep, we’ll be very sensitive to changes. Any object that changes in position – it has to be a solar system object if it changes position – so asteroids; if Planet Nine existed, we’d probably see it. Changes in brightness – exploding stars, so supernova, nova, stellanova. Lots of objects vary in brightness. There are all sorts of variable stars, disruptive events that happen in distant galaxies. A lot of galaxies just vary their brightness naturally because of what’s going on with the black hole in the galaxy’s centre. We’ll detect all that, and we’ll also deliver a message to the US and our partner science communities within a minute of taking every observation that says – in all these directions, there’s an object that’s changed. And then people can take that information and look at the objects they care about, with other telescopes. We’re also a finder, but we’ll be doing it over the whole sky and for very dim objects. All that together is totally unique. There’s no other project like it.
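To make the idea of flagging "an object that's changed" concrete, here is a deliberately simplified Python sketch. It compares tonight's brightness measurements against earlier reference values and reports anything new or significantly different. The `Measurement` class, the `find_changed` function, the 5-sigma cut, and the sample values are all hypothetical illustrations; the interview does not describe how the Rubin alert system actually decides that something has changed.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    object_id: str
    flux: float        # measured brightness, arbitrary units
    flux_error: float  # 1-sigma uncertainty on that brightness


def find_changed(reference, new, n_sigma=5.0):
    """Return ids that are new, or whose flux moved by more than n_sigma."""
    ref_by_id = {m.object_id: m for m in reference}
    changed = []
    for m in new:
        ref = ref_by_id.get(m.object_id)
        if ref is None:
            changed.append(m.object_id)   # never seen before: report it
            continue
        # Combine both uncertainties before testing the difference.
        combined_error = (ref.flux_error ** 2 + m.flux_error ** 2) ** 0.5
        if abs(m.flux - ref.flux) > n_sigma * combined_error:
            changed.append(m.object_id)
    return changed


# Made-up example values, for illustration only.
reference = [
    Measurement("star-a", 100.0, 2.0),
    Measurement("galaxy-b", 50.0, 2.0),
]
tonight = [
    Measurement("star-a", 101.0, 2.0),    # within the noise
    Measurement("galaxy-b", 90.0, 2.0),   # brightened a lot
    Measurement("new-c", 30.0, 2.0),      # not in the reference list
]

alerts = find_changed(reference, tonight)
print(alerts)
```

Here the steady star is ignored, while the brightened galaxy and the previously unseen object are both reported. A real system would also have to handle moving objects, image artefacts, and varying observing conditions.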

There are so many images; the only way to analyze it is via computer. We have constructed a very sophisticated, very extensive set of computing programs. Custom written, very specialized for the astronomical observing that we’re doing. We call them pipelines. Those sets of pipelines will go from raw images to lists of stars and galaxies. There’s a very big group that has been developing those pipelines over many years. They are written in a combination of Python and C++; we’re a modern outfit. Little bits of other languages, but mostly those two. The computing facility in the US is going to be at SLAC. Most of it is very parallelizable software, so you can analyze a single sensor all by itself. We’ll also have an enormous database that will have information about every object. We expect to see 37 billion objects. An object is a star or galaxy or an asteroid, and we expect to see them each 850 times. Call it a thousand. So we’ll have at least one kind of database with 37 trillion references. That is a very, very big database. We have a lot of experts in big databases – how to access them efficiently. That’s big enough that not everyone can just query it. It has to be done in a very managed way. The data analysis and data processing is a huge challenge.
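The remark that a single sensor can be analyzed "all by itself" describes what programmers call an embarrassingly parallel workload. The toy Python sketch below illustrates the pattern with the standard library's `concurrent.futures`: each fake sensor image is handed to a worker independently. The `count_bright_pixels` function, the sensor names, and the tiny images are invented for illustration and are not taken from the Rubin pipelines.

```python
from concurrent.futures import ThreadPoolExecutor


def count_bright_pixels(sensor_image, threshold=100):
    """Toy stand-in for a per-sensor step: count pixels above a threshold."""
    return sum(1 for row in sensor_image for value in row if value > threshold)


# Three tiny made-up "sensor images"; a real sensor has millions of pixels.
sensor_images = {
    "sensor-00": [[5, 120, 7], [6, 8, 300]],
    "sensor-01": [[4, 5, 6], [7, 8, 9]],
    "sensor-02": [[500, 5, 6], [7, 8, 9]],
}

# No sensor needs data from any other, so the work maps cleanly onto workers.
# pool.map returns results in input order, so zip pairs them with the names.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = pool.map(count_bright_pixels, sensor_images.values())
    counts = dict(zip(sensor_images, results))

print(counts)
```

Threads are used here only to keep the example short and portable. For heavy numerical work, the same structure would more plausibly be spread across separate processes or many machines.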

The Phoblographer: Why create a telescope system in the US and then have it shipped to Chile? Also, what influenced the selection of the southern hemisphere skies as the area that the telescope system will be studying?

Aaron Roodman: We actually picked the southern hemisphere based on the location. The Southern Galactic Cap is a lot more interesting than the Northern Galactic Cap because the Magellanic Clouds are there, the centre of the galaxy is there. The south is a little more interesting, maybe, but the biggest determinant was that the site in Chile was the best site. This goes back before I joined the project in 2010. I think in 2006 / 2007 there was work to pick the site. It was mostly chosen based on excellent astronomical observing conditions. Good air, good seeing so that the stars twinkling was minimized. Good weather – you don’t want rain; you don’t want clouds. The site we’re at is a developed site. There are two other telescopes that are already there. The US has a treaty with Chile that set aside this whole region of the Southern Atacama desert; big enough for astronomy. The US National Telescope in the CTIO Observatory is nearby.

This part of Chile is among the 2 or 3 best sites in the world for optical astronomy. The Europeans have their major telescopes in this part of Chile. There are some private telescopes. This is the place to go. It is not open to the public. I think there are tours for the public that can be arranged, but I don’t think they’re offered regularly. There are visitors; it is possible to get a visit, but you need a special arrangement. There’s no visitors centre up there. That’s why it’s a US project, but we went into Chile. The sites in Arizona were not good enough. My understanding is that early in this site selection process, Mauna Kea in Hawaii was considered, but it didn’t make the final group of sites. Because even 15 years ago, my colleagues recognized that there were serious political issues with putting another big telescope on Mauna Kea. It’s probably the best site in the world; there’s a new telescope up. It just wants to start searching, but it’s embroiled in controversy.

The Phoblographer: Is this going to be accessible only to government bodies and scientists once ready, or will the public also use it to gaze into the universe at some point?

Aaron Roodman: In some ways, yes. There is a part of the project which is dedicated to education and public outreach. They will make available to the public some subset of the images, and there will be some sort of online facility to view the images. There will be things set up for school kids – K through 12 – educational projects that you could use to learn about astronomy. And I think there will be good connections to citizen science. There are a number of very successful projects using astronomical data that let people look at and interact with the data in a useful way. There have been certain astronomical objects discovered by citizens looking at these data sets. I think that there will end up being a number of such projects. But you won’t be able to see it as it comes in.

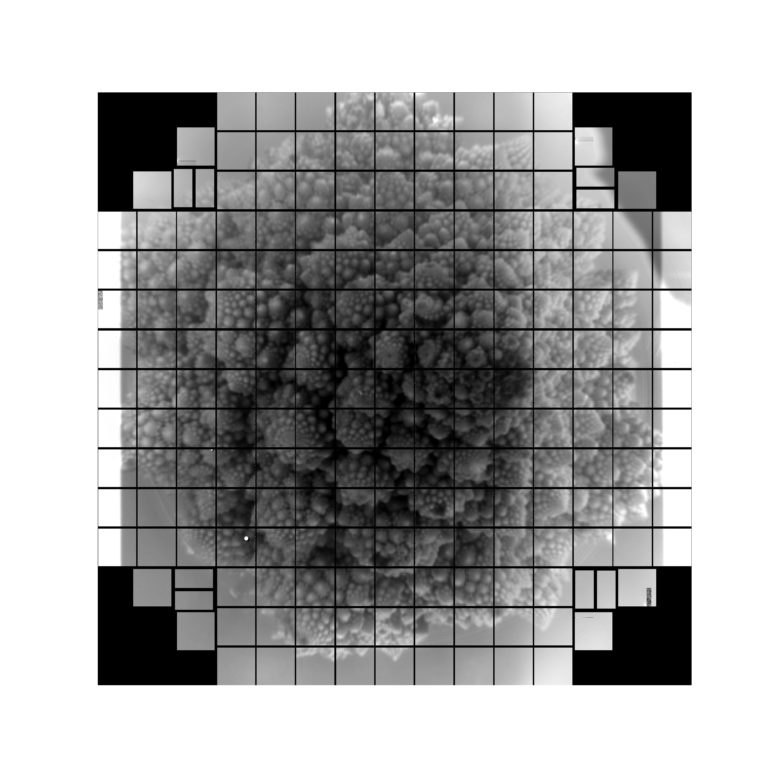

(Jacqueline Orrell/SLAC National Accelerator Laboratory)

You can explore this image at: https://www.slac.stanford.edu/~tonyj/osd/public/romanesco.html

(LSST Camera Team/SLAC National Accelerator Laboratory/Rubin Observatory)

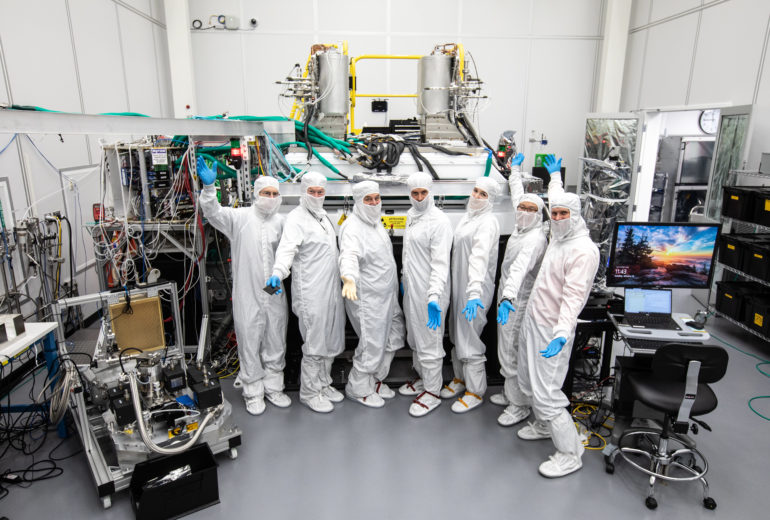

The Phoblographer: Tell us about your team. How many people have worked on this? It must feel like a huge accomplishment as it’s nearing completion.

Aaron Roodman: The project was proposed by my colleague Tony Tyson in 1998. It looked different, but it’s basically the same project. I actually don’t have a number. We’ve probably had maybe 60 or 70 people work on the camera in different ways. Some of those are engineers and technicians who built a piece of the project. Our team now is smaller, but even at SLAC plus some of our collaborating institutions, we easily have 30 to 40 people working on it. We have a montage of different people working on the camera, close to 80 people.

(Dawn Harmer/SLAC National Accelerator Laboratory)

The Phoblographer: Is this going to be shipped in parts to its final destination and assembled on-site in Chile, or as an assembled product?

Aaron Roodman: It’s all going to be put together in the US. We’re going to send it as two pieces. The two bigger lenses are in a unit. Those two will disassemble, and we have a crate that was already built for them. We’ll put them back in their crate. Then the whole rest of the camera will go as one big piece. We actually have already built a shipping frame to hold it. It will go in a big shipping container. We’re actually going to fly it there. The shipment is delicate enough, and we devoted a lot of engineering time to developing the frame. We tested it recently. We put a metal structure the same size and weight as the camera into our shipping frame, and we shipped it to Chile. Drove it to the airport, flew it, drove it up to the mountain top, and it was instrumented with accelerometers which we studied. For example, the landing isn’t too bad. When we lift it from one place and put it down in the next, there’s a little shock there. But it was done really well; those were really small. For the whole Rubin Observatory project, we have a specialist in shipment as part of the team.

The Phoblographer: Once everything is set for the inauguration, where is the system going to be pointed at first? Can it move, or will the telescope be fixed to point in a single direction?

Aaron Roodman: The telescope is actually designed to move from one direction to another very quickly. Much faster than typical telescopes of this size. I’m sure we’ll have some long meeting to decide which direction it’ll be pointed because the first image will be the PR image. There are a couple of really beautiful big galaxies in the southern hemisphere. Often you’d choose one of those. If it was in the northern hemisphere, you’d do Andromeda. In the southern hemisphere, there are a couple of other, not as well known, but large galaxies. Those are fun to look at first, so maybe one of those. But I’m sure we’ll have to come to a decision because it’ll be for PR. We have a mini camera with 9 CCDs, and we’re going to put that on a telescope first. That’s actually ready now in Chile, almost ready to go. Once the telescope and the mirrors are all there, the first images will come from the mini camera. And then it will be another 6 to 8 months before we have the full camera installed.

Photo by Farrin Abbott/SLAC National Accelerator Laboratory

All images used with permission from SLAC. Visit their website and also the YouTube and Twitter pages for more information about the Vera Rubin Observatory project.